You’ve invested in AI. The pilots showed promise, leadership expects results, and budgets are already spent. But progress stalls because the data is messy, scattered across teams, inconsistent, and unreliable. Instead of ROI, you’re stuck explaining delays.

The issue isn’t the AI tools. It’s the foundation. Fragmented data makes scaling nearly impossible. The way forward is clear: turn the chaos into structured, governed, AI-ready assets. Feeding fundamental data to AI systems is what unlocks performance and measurable outcomes. You don’t need advanced coding skills or a specialist team to begin—only a practical strategy that aligns stakeholders, safeguards compliance, and builds the infrastructure for real-time use.

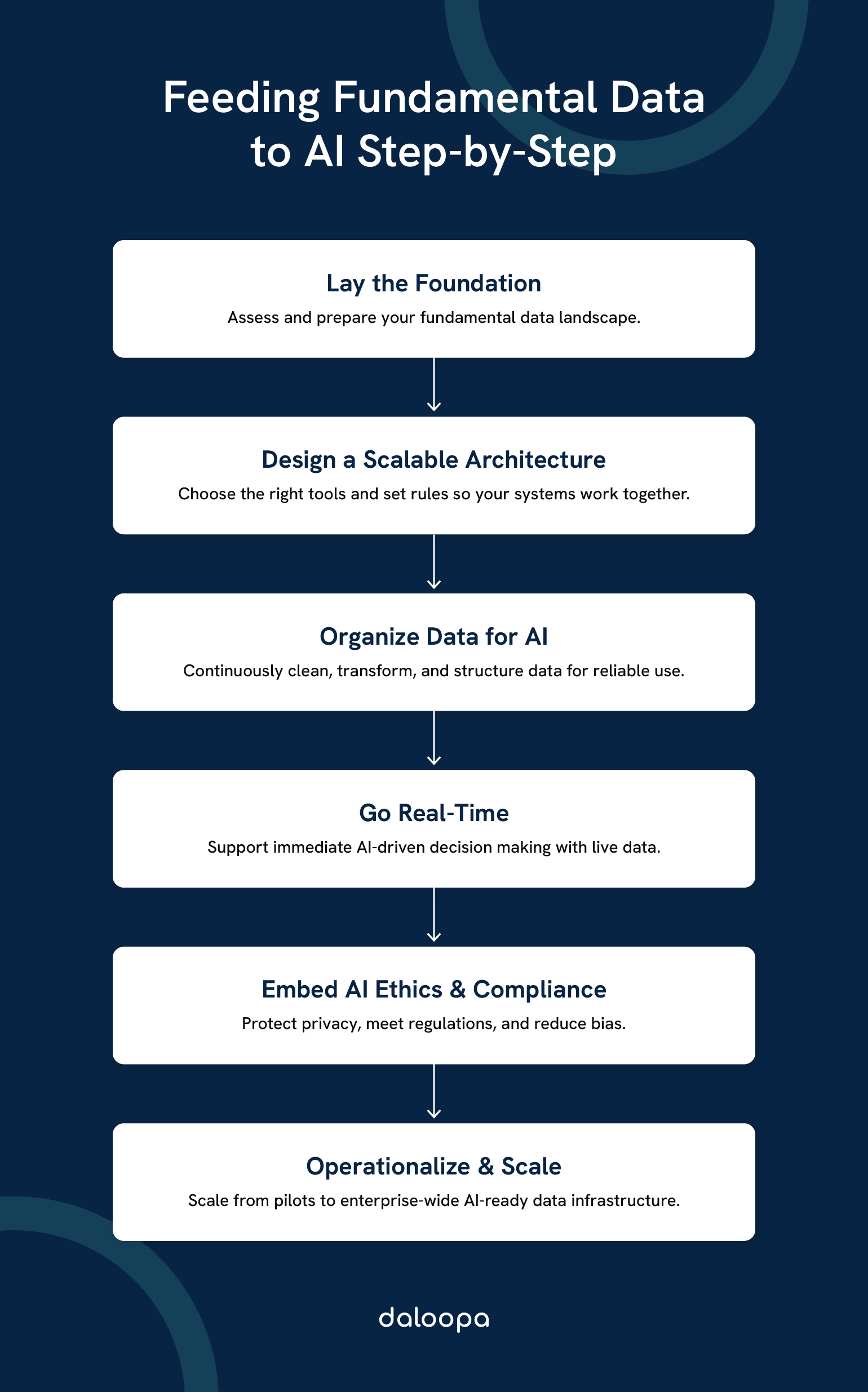

This integration guide provides a step-by-step roadmap to establish a reliable pipeline that continuously feeds AI the quality data it requires. With that structure in place, you can move beyond small pilots and realize the business impact your AI investments were meant to deliver.

1. Understanding the AI Data Foundation

A successful AI initiative depends on how your data is prepared, structured, and managed before it reaches models. Without a consistent data foundation, even the most sophisticated machine learning tools fall short and produce unreliable or misleading outcomes.

The Critical Role of Quality Data in AI Success

AI learns from patterns in information, so the quality of that information sets the ceiling for accuracy. Inaccurate or inconsistent records can lead to flawed predictions that erode trust among teams relying on insights.

Focus on data accuracy, completeness, and consistency well before you scale predictive analytics. That means fixing errors, eliminating duplicates, and standardizing formats across departments so information feeds into one shared system.

Poor data quality translates directly into missed revenue opportunities, like inaccurate lead scores leading to wasted sales effort, or incomplete transaction data skewing churn predictions.

For finance teams specifically, this challenge becomes even more critical when dealing with fundamental data that influences investment decisions and risk models. Daloopa’s comprehensive fundamental data platform addresses this by providing clean, standardized financial datasets that are already AI-ready. This eliminates the time-consuming data preparation phase that often derails AI initiatives in the finance sector.

In practice, feeding fundamental data to AI at this stage helps ensure every model is built on a trustworthy foundation, so errors are caught before they scale.

One effective way to enforce standards is to build a data quality framework that tracks key indicators:

| Metric | Why It Matters | Example Issue |
|---|---|---|
| Accuracy | Predictions reflect real conditions | Wrong customer addresses |
| Completeness | Prevents gaps in training data | Missing transaction records |
| Consistency | Avoids conflicting interpretations | Different date formats |

Continuously monitoring quality standards does more than improve model reliability. It also protects pipeline efficiency, forecast accuracy, and operational planning.
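Here is a minimal sketch of how the completeness and consistency indicators from the table could be scored, plus a duplicate rate. The field names and sample rows are hypothetical. Accuracy is left out because it needs a trusted reference source to compare against, and this sketch does not assume one exists.

```python
from collections import Counter
from datetime import datetime

# Hypothetical customer extract; field names are illustrative assumptions.
records = [
    {"customer_id": "C1", "address": "12 Main St", "signup_date": "2024-01-15"},
    {"customer_id": "C2", "address": "", "signup_date": "01/20/2024"},
    {"customer_id": "C2", "address": "9 Oak Ave", "signup_date": "2024-01-20"},
    {"customer_id": "C3", "address": None, "signup_date": "2024-02-01"},
]

def completeness(rows, field):
    """Share of rows where `field` is present and non-empty."""
    if not rows:
        return 0.0
    filled = sum(1 for r in rows if r.get(field) not in (None, ""))
    return filled / len(rows)

def duplicate_rate(rows, key):
    """Share of rows that repeat a `key` value already seen (a proxy for duplicates)."""
    if not rows:
        return 0.0
    counts = Counter(r.get(key) for r in rows)
    return sum(c - 1 for c in counts.values()) / len(rows)

def date_consistency(rows, field, fmt="%Y-%m-%d"):
    """Share of non-empty values in `field` that parse with the agreed format (ISO 8601 here)."""
    values = [r[field] for r in rows if r.get(field)]
    if not values:
        return 0.0

    def parses(v):
        try:
            datetime.strptime(v, fmt)
            return True
        except ValueError:
            return False

    return sum(parses(v) for v in values) / len(values)

report = {
    "address_completeness": completeness(records, "address"),
    "customer_id_duplicate_rate": duplicate_rate(records, "customer_id"),
    "signup_date_consistency": date_consistency(records, "signup_date"),
}
print(report)
# → {'address_completeness': 0.5, 'customer_id_duplicate_rate': 0.25, 'signup_date_consistency': 0.75}
```

If you track these scores per load and alert when one drops below an agreed threshold, the framework becomes an ongoing monitor rather than a one-time audit.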

Assessing Your Current Data Landscape

Before moving forward with AI, you need clarity on the data you already manage. That starts with identifying the sources, formats, and flows spread across your business systems.

Valuable information often sits inside CRM tools, ERP platforms, spreadsheets, or third-party apps, yet remains disconnected.

For example, customer activity data may live in your CRM while payment history is siloed in ERP. Without connecting them, a churn model only sees partial behavior and over- or underestimates risk.
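The sketch below illustrates the CRM/ERP gap. It outer-joins two extracts on a customer ID and reports which customers appear in only one system. The dictionaries stand in for real CRM and ERP exports, and their field names are hypothetical.

```python
# Hypothetical extracts keyed by customer ID; real data would come from CRM and ERP exports.
crm_activity = {"C1": {"logins_30d": 12}, "C2": {"logins_30d": 0}, "C3": {"logins_30d": 5}}
erp_payments = {"C1": {"late_payments": 0}, "C2": {"late_payments": 3}, "C4": {"late_payments": 1}}

def join_customer_view(activity, payments):
    """Outer-join two sources on customer ID and list IDs missing from each side."""
    merged = {}
    for cid in sorted(activity.keys() | payments.keys()):
        # Combine whatever each system knows about this customer.
        merged[cid] = {**activity.get(cid, {}), **payments.get(cid, {})}
    missing_in_erp = sorted(activity.keys() - payments.keys())
    missing_in_crm = sorted(payments.keys() - activity.keys())
    return merged, missing_in_erp, missing_in_crm

merged, missing_in_erp, missing_in_crm = join_customer_view(crm_activity, erp_payments)
print(merged["C2"])                    # {'logins_30d': 0, 'late_payments': 3}
print(missing_in_erp, missing_in_crm)  # ['C3'] ['C4']
```

In this view, C2's combined record (no recent logins plus late payments) is exactly the signal a churn model would miss if it saw only one system. The two "missing" lists are a natural starting point for the audit described below.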

Questions worth asking include:

- Which sources are siloed?

- How frequently are records refreshed?

- What governance rules already exist for accuracy and access?

An audit of your current landscape often reveals gaps such as outdated records, missing customer activity, or inconsistent reporting. Documenting these gaps makes it easier to design integration steps that align with AI-driven goals.

By mapping everything clearly, you avoid wasting time and budget training models on incomplete or irrelevant datasets.

Establishing Clear AI-Driven Business Objectives

AI efforts succeed only when tied to specific outcomes. Objectives provide direction and ensure data preparation supports measurable business goals rather than vague experimentation.

The best approach is to link AI directly to defined operational or revenue priorities. That might mean boosting the accuracy of lead scoring, reducing churn prediction errors, or improving supply forecasting.

Objectives also highlight which datasets deserve priority. For example, if customer retention is the focus, then transaction history, engagement metrics, and support logs matter more than unrelated operational files.

Clear objectives prevent over-collection. Instead of hoarding every record, your team targets the data that strengthens the models supporting those goals.

By aligning preparation with outcomes, AI delivers not just insights but measurable results tied to growth.

2. Building Your Data Integration Strategy

Data must flow consistently, securely, and at scale if AI is to work in practice. Building the right integration strategy requires aligning infrastructure, governance, and technology so systems feed fundamental data to AI reliably without redundancy or conflict.

Designing a Scalable Data Architecture for Feeding Fundamental Data to AI

A scalable data architecture ensures that growth in both structured and unstructured sources doesn’t force constant redesigns. Flexibility comes from supporting cloud-native platforms and on-premise systems with hybrid models that handle both modern and legacy sources.

Design pipelines to feed fundamental data to AI in real time alongside batch updates. For instance, streaming website activity into the pipeline gives sales teams immediate feedback on lead engagement. Meanwhile, quarterly financial data ingested in batches through a fundamental financial data API keeps forecasts accurate without straining resources.

Important considerations include:

- Data storage models (warehouses vs. lakes)

- Connectors and APIs that ensure interoperability

- Automation layers for monitoring and error handling

By favoring modular design, you can add, swap, or scale components without disrupting the full system. This keeps expansion predictable and costs manageable.

Establishing Robust Data Governance Frameworks

Governance ensures accuracy, consistency, and accountability throughout the lifecycle of data. Without it, models risk being trained on biased, outdated, or incomplete inputs. Strong governance defines ownership, access, and retention rules so your team understands responsibilities from the outset.

You should define and monitor standards for quality—like timeliness, completeness, and validity. Automated validation checks can catch issues before they feed downstream, reducing the chance of faulty AI outputs.

Governance also supports compliance. Regulations such as GDPR and HIPAA impose transparency and accountability obligations. Documenting lineage (where information comes from, how it is transformed, and who accessed it) helps satisfy those obligations and supports audits.
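Lineage can start as simply as an append-only log of events that records source, transformation, and actor. The sketch below keeps that log in memory as a stand-in for a real metadata or catalog service. The dataset, source, and actor names are hypothetical.

```python
import json
from dataclasses import dataclass, asdict, field
from datetime import datetime, timezone

@dataclass(frozen=True)
class LineageEvent:
    """One step in a dataset's history: where it came from, what happened, and who did it."""
    dataset: str
    source: str
    transformation: str
    actor: str
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())

class LineageLog:
    """Append-only, in-memory lineage log (a stand-in for a real metadata store)."""

    def __init__(self):
        self._events = []

    def record(self, event):
        self._events.append(event)

    def history(self, dataset):
        """All events for one dataset, in the order they were recorded."""
        return [e for e in self._events if e.dataset == dataset]

    def export_json(self):
        """Serialize the log, e.g. to hand to auditors."""
        return json.dumps([asdict(e) for e in self._events], indent=2)

log = LineageLog()
log.record(LineageEvent("revenue_q", "erp.invoices", "ingested", "etl-service"))
log.record(LineageEvent("revenue_q", "erp.invoices", "currency normalized to USD", "etl-service"))
log.record(LineageEvent("revenue_q", "warehouse.revenue_q", "read for model training", "analyst@example.com"))
print(len(log.history("revenue_q")))  # 3
```

Because events are never edited in place, the exported log can serve as an audit trail for the question "how did this figure get here?"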

Robust and flexible technologies like Daloopa’s MCP can simplify compliance, especially in the finance industry. Daloopa integrations provide AI models with direct access to verified fundamental data with hyperlinks to the data sources, maintaining complete audit trails and compliance standards.

Selecting the Right Integration Tools and Technologies

The tools you choose dictate the efficiency of your integration. The best options support both ETL (extract, transform, load) and ELT (extract, load, transform) approaches, which gives you flexibility in on-premise or cloud-first environments.

When evaluating platforms, weigh factors such as:

- Scalability to handle expanding data volumes.

- Pre-built connectors for common systems like CRM or ERP.

- Automation features to reduce manual overhead.

- Monitoring dashboards for visibility and health checks.

The key question is business impact: does this tool reduce time-to-insight? For example, data virtualization means RevOps can access pipeline data from multiple CRMs without waiting weeks for IT to consolidate it, cutting sales forecasting cycles significantly.

For mid-sized enterprises, hybrid platforms provide strong value, bridging cloud and on-premise systems with fewer disruptions. Selecting tools with strong vendor support and active user communities makes long-term management smoother.

By aligning technology with governance and architecture choices, you set a base that supports current needs while preparing for long-term growth.

3. Data Preparation and Processing

Preparing data for AI means cleaning, transforming, and organizing it so models can actually learn. That involves correcting errors, aligning formats, and creating repeatable processes to keep flows reliable from ingestion to deployment.

When preparation is consistent, it ensures that every pipeline feeds fundamental data to AI in a way that supports accuracy, compliance, and scalability. Organizations that prioritize data quality for AI systems reduce drift, improve trust, and deliver forecasts leadership can rely on.

Daloopa simplifies this by combining a fundamental financial data API with pipelines that can continuously feed fundamental data to AI without manual wrangling.

Data Cleaning and Standardization Techniques

Legacy systems, spreadsheets, and disconnected apps often create messy inputs with duplicates, inconsistent fields, and missing values that damage accuracy. Cleaning begins with deduplication, validation checks, and handling null values either through imputation or removal.
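The cleaning steps above can be sketched as a small function that deduplicates, drops records missing required fields, validates values, and imputes the rest. The order schema, the "amounts must be non-negative" rule, and median imputation are illustrative assumptions. Each team would choose its own rules and imputation strategy.

```python
from statistics import median

# Hypothetical raw order rows with typical problems.
rows = [
    {"order_id": "A1", "amount": 120.0, "region": "EU"},
    {"order_id": "A1", "amount": 120.0, "region": "EU"},  # duplicate business key
    {"order_id": "A2", "amount": None, "region": "US"},   # missing amount -> impute
    {"order_id": "A3", "amount": -5.0, "region": "US"},   # fails validation
    {"order_id": "A4", "amount": 80.0, "region": None},   # missing required field -> remove
    {"order_id": "A5", "amount": 60.0, "region": "EU"},
]

REQUIRED = ("order_id", "region")

def clean(rows):
    # 1. Deduplicate on the business key, keeping the first occurrence.
    seen, deduped = set(), []
    for r in rows:
        if r["order_id"] not in seen:
            seen.add(r["order_id"])
            deduped.append(dict(r))  # copy so the raw input is not mutated
    # 2. Remove rows missing required fields.
    kept = [r for r in deduped if all(r.get(f) is not None for f in REQUIRED)]
    # 3. Validate: amounts must be non-negative when present.
    valid = [r for r in kept if r["amount"] is None or r["amount"] >= 0]
    # 4. Impute missing amounts with the median of the remaining observed values.
    observed = [r["amount"] for r in valid if r["amount"] is not None]
    fill = median(observed) if observed else 0.0
    for r in valid:
        if r["amount"] is None:
            r["amount"] = fill
    return valid

cleaned = clean(rows)
print([(r["order_id"], r["amount"]) for r in cleaned])
# → [('A1', 120.0), ('A2', 90.0), ('A5', 60.0)]
```

Whether to impute or remove is a business decision. Imputing a revenue figure may be acceptable for a rough forecast but not for a financial report.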

Standardization follows, ensuring consistency across structured and unstructured formats. Customer addresses, for instance, should follow one format, while free-text entries may require tokenization or normalization. Referencing standards such as ISO 8601 for dates or FHIR in healthcare reduces ambiguity and improves cross-system compatibility.
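This minimal sketch normalizes mixed date strings to ISO 8601 and applies a simple address normalization. The list of inbound date formats and the abbreviation map are assumptions. Formats are tried in order, so an ambiguous value such as `03/04/2024` is read as month/day here. A real pipeline should fix the format per source rather than guess.

```python
import re
from datetime import datetime

# Assumed inbound formats, tried in order; list only what your sources actually emit.
KNOWN_DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y", "%d.%m.%Y", "%Y%m%d")

def to_iso_date(value):
    """Return the date as ISO 8601 (YYYY-MM-DD), or None if no known format matches."""
    for fmt in KNOWN_DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None  # leave unparseable values for review instead of guessing

# Hypothetical abbreviation map for street types.
ABBREVIATIONS = {"street": "St", "st": "St", "avenue": "Ave", "ave": "Ave", "road": "Rd", "rd": "Rd"}

def normalize_address(value):
    """Collapse whitespace, title-case words, and apply the abbreviation map."""
    words = re.sub(r"\s+", " ", value.strip()).split(" ")
    return " ".join(ABBREVIATIONS.get(w.lower().rstrip("."), w.title()) for w in words)

print(to_iso_date("01/20/2024"), to_iso_date("20.01.2024"))  # 2024-01-20 2024-01-20
print(normalize_address("  12   main street "))              # 12 Main St
```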

Automated scripts or quality tools enforce these rules at ingestion, stopping errors before they spread downstream. That means fewer “garbage in, garbage out” problems and more confidence in metrics leadership depends on, like churn prediction or quarterly forecasts.

Data Transformation for AI Readiness

Once cleaned, data needs reshaping to fit AI workflows. That involves encoding categorical values, scaling variables, and transforming text or images into numerical formats models can understand.
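Two of the transformations above, one-hot encoding of categories and min-max scaling of numbers, are sketched below in plain Python. Libraries such as scikit-learn provide production versions. The plan names and values are hypothetical. Both functions accept bounds or categories learned on training data, so new data is encoded the same way at inference time.

```python
def one_hot(values, categories=None):
    """Encode categorical values as 0/1 vectors; categories unseen in training map to all zeros."""
    cats = sorted(set(values)) if categories is None else list(categories)
    index = {c: i for i, c in enumerate(cats)}
    vectors = []
    for v in values:
        vec = [0] * len(cats)
        if v in index:
            vec[index[v]] = 1
        vectors.append(vec)
    return cats, vectors

def min_max_scale(values, lo=None, hi=None):
    """Rescale numbers using [lo, hi]; pass training bounds when scaling new data.

    Values outside the fitted range land outside [0, 1]. A constant column maps to 0.0.
    """
    lo = min(values) if lo is None else lo
    hi = max(values) if hi is None else hi
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

cats, encoded = one_hot(["basic", "pro", "basic", "enterprise"])
print(cats)     # ['basic', 'enterprise', 'pro']
print(encoded)  # [[1, 0, 0], [0, 0, 1], [1, 0, 0], [0, 1, 0]]
print(min_max_scale([25.0], lo=10.0, hi=40.0))  # [0.5]
```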

Structured data often requires normalization or aggregation, while unstructured sources, such as customer emails, may need natural language preprocessing like stemming or entity recognition. Time-series data demands aligned timestamps across systems to avoid misleading interpretations.
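Here is a minimal sketch of timestamp alignment, assuming each system emits ISO 8601 timestamps that include a UTC offset. Every event is converted to UTC and bucketed into shared hourly intervals, so events from different systems line up. The hourly granularity and the sample events are hypothetical.

```python
from datetime import datetime, timezone

def to_utc_hour(ts):
    """Parse an ISO 8601 timestamp with an offset, convert to UTC, and floor to the hour."""
    dt = datetime.fromisoformat(ts)
    if dt.tzinfo is None:
        # A timestamp without an offset is ambiguous; reject it rather than guess.
        raise ValueError(f"timestamp without offset: {ts}")
    return dt.astimezone(timezone.utc).replace(minute=0, second=0, microsecond=0)

def align(events):
    """Sum (timestamp, value) pairs from different systems into shared UTC hourly buckets."""
    buckets = {}
    for ts, value in events:
        key = to_utc_hour(ts)
        buckets[key] = buckets.get(key, 0) + value
    return dict(sorted(buckets.items()))

events = [
    ("2024-03-01T09:15:00-05:00", 2),  # e.g. a US-East CRM: 14:15 UTC
    ("2024-03-01T14:40:00+00:00", 3),  # e.g. a UTC web log
    ("2024-03-01T16:05:00+01:00", 1),  # e.g. an EU ERP: 15:05 UTC
]
for hour, total in align(events).items():
    print(hour.isoformat(), total)
# 2024-03-01T14:00:00+00:00 5
# 2024-03-01T15:00:00+00:00 1
```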

Another option is data virtualization, which provides access to diverse sources without duplicating them physically. This allows transformations in real time while avoiding storage bloat. By setting up repeatable pipelines, you ensure consistent readiness for every dataset entering AI.

Building Efficient Data Pipelines

Efficient pipelines automate the flow from ingestion to model-ready storage with minimal manual effort. Common steps include extraction, validation, transformation, and loading into training environments.

Feedback loops can monitor drift and trigger updates when patterns shift, keeping models accurate as business conditions change.
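One common way to implement such a feedback loop is the Population Stability Index (PSI). PSI compares how a feature is distributed in the training baseline versus recent data. The sketch below is a plain-Python version. The bin edges are chosen by the caller, and the 0.25 retraining threshold is a widely used rule of thumb, not a universal standard.

```python
import math

def psi(expected, actual, edges):
    """Population Stability Index between a baseline sample and a recent sample.

    `edges` are ascending bin boundaries; values below the first edge or at/above the
    last edge fall into the outer bins. A small floor avoids log(0) for empty bins.
    """
    def shares(sample):
        counts = [0] * (len(edges) + 1)
        for x in sample:
            counts[sum(1 for e in edges if x >= e)] += 1
        return [max(c / len(sample), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

def needs_retraining(baseline, recent, edges, threshold=0.25):
    """Trigger an update when the distribution shift exceeds the (assumed) threshold."""
    return psi(baseline, recent, edges) > threshold

baseline = list(range(9))     # evenly spread across three bins
recent = [6, 7, 8, 7, 6, 8]   # everything shifted into the top bin
print(needs_retraining(baseline, recent, edges=[3, 6]))  # True
```

In a real pipeline, a check like this would run per feature on a schedule. A `True` result would open a ticket or start a retraining job rather than retrain automatically without review.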

Modern practices favor modular pipelines that combine APIs, batch processing, and streaming. For example:

| Stage | Purpose | Example Tools/Methods |
|---|---|---|
| Ingestion | Collect raw data | ETL, APIs, event streams |
| Processing | Clean and transform inputs | Spark, Python scripts |
| Storage/Access | Serve AI-ready data | Data warehouses, lakes |

Automating these flows reduces latency, ensures consistency, and gives teams organization-wide access to data they can trust. This, in turn, translates into faster reporting cycles, real-time visibility into revenue pipelines, and fewer surprises when presenting to the board.
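The ingestion, processing, and storage stages in the table can be expressed as small composable functions. This modular design lets you swap or add a stage without touching the others. In the sketch below, the in-memory batch and dictionary "warehouse" stand in for real sources and storage. The record fields and the cents-to-units conversion are hypothetical.

```python
from typing import Callable, Dict, List

Record = Dict[str, object]
Stage = Callable[[List[Record]], List[Record]]

def validate(records: List[Record]) -> List[Record]:
    """Processing stage: keep only records with an id and a numeric amount."""
    return [r for r in records if r.get("id") and isinstance(r.get("amount"), (int, float))]

def transform(records: List[Record]) -> List[Record]:
    """Processing stage (hypothetical rule): convert amounts from cents to currency units."""
    return [{**r, "amount": r["amount"] / 100} for r in records]

def run_pipeline(raw: List[Record], stages: List[Stage], store: Dict[str, Record]) -> int:
    """Run stages in order, then load results into `store` keyed by id.

    Keying by id makes the load an upsert, so re-running a batch does not duplicate rows.
    """
    records = raw
    for stage in stages:
        records = stage(records)
    for r in records:
        store[str(r["id"])] = r
    return len(records)

warehouse: Dict[str, Record] = {}  # stand-in for a warehouse or lake table
raw_batch = [
    {"id": "t1", "amount": 1250},
    {"id": None, "amount": 300},   # rejected: no id
    {"id": "t2", "amount": "n/a"}, # rejected: non-numeric amount
    {"id": "t3", "amount": 990},
]
loaded = run_pipeline(raw_batch, [validate, transform], warehouse)
print(loaded, warehouse["t1"])  # 2 {'id': 't1', 'amount': 12.5}
```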

4. Implementing Real-Time Data Capabilities

For AI to impact day-to-day decisions, infrastructure must capture, process, and validate information as it happens. That means building systems that continuously feed fundamental data to AI applications without interruption. Focus on building flows that maintain accuracy while scaling with continuous use.

Streaming Data Architecture for AI Applications

Streaming architectures process incoming information instantly, instead of waiting for batch updates. This enables immediate action, whether that’s adjusting inventory levels or flagging unusual activity.

Platforms like Apache Kafka, Flink, or cloud-native services manage these flows by handling large, high-speed pipelines and delivering data directly into AI models.

Reliability comes from tackling latency, scalability, and fault tolerance. Low latency ensures outputs remain timely. Scalability ensures systems absorb volume growth. Fault tolerance keeps pipelines running even if part of the system fails.

A streamlined framework often includes:

| Step | Purpose | Example Tools |
|---|---|---|
| Ingest | Collect data streams | Kafka, Kinesis |
| Process | Apply transformations | Flink, Spark Streaming |
| Deliver | Feed AI models | APIs, data lakes |

This design aligns AI with operational requirements in real time.
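The "Process" step often means windowed aggregation, such as counting events per minute before features reach a model. Engines like Flink and Spark Streaming provide this with watermarks for late or out-of-order events. The sketch below does not use their APIs. It is a plain-Python illustration of a tumbling window that assumes events arrive in timestamp order. The event names are hypothetical.

```python
from collections import Counter
from typing import Iterable, Iterator, Tuple

def tumbling_counts(events: Iterable[Tuple[int, str]], window_s: int = 60) -> Iterator[Tuple[int, dict]]:
    """Count events per key in fixed, non-overlapping windows.

    Assumes events arrive in timestamp order (seconds). A window is emitted as soon as
    an event from a later window arrives, and the last window is emitted at end of stream.
    """
    current_start = None
    counts = Counter()
    for ts, key in events:
        start = ts - ts % window_s
        if current_start is not None and start != current_start:
            yield current_start, dict(counts)
            counts = Counter()
        current_start = start
        counts[key] += 1
    if current_start is not None:
        yield current_start, dict(counts)

events = [(0, "page_view"), (15, "page_view"), (42, "signup"), (61, "page_view"), (130, "signup")]
for window_start, counts in tumbling_counts(events):
    print(window_start, counts)
# 0 {'page_view': 2, 'signup': 1}
# 60 {'page_view': 1}
# 120 {'signup': 1}
```

Because the function is a generator, each window is available downstream as soon as it closes. That is the property that keeps latency low in a real streaming system.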

Integrating IoT and Edge Data Sources

IoT devices and edge systems expand what real-time data can cover. Sensors, smart meters, or logistics trackers provide immediate insight into operations. Feeding these into AI pipelines closes blind spots and supports quicker responses.

Edge computing processes data closer to its source, minimizing bandwidth use and delays. For example, equipment sensors can run anomaly checks locally and send only flagged results upstream.
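A minimal version of that local anomaly check is a rolling z-score. Each new reading is compared with the mean and standard deviation of recent normal readings, and only outliers are forwarded. The window size, the 3.0 threshold, and the temperature readings are assumptions to tune per device.

```python
from collections import deque
from statistics import mean, pstdev

class EdgeAnomalyFilter:
    """Rolling z-score check meant to run on the device; only flagged readings go upstream."""

    def __init__(self, window=20, z_threshold=3.0, min_history=5):
        self.history = deque(maxlen=window)
        self.z_threshold = z_threshold
        self.min_history = min_history

    def check(self, reading):
        """Return True if `reading` is anomalous relative to recent normal readings."""
        flagged = False
        if len(self.history) >= self.min_history:
            mu, sigma = mean(self.history), pstdev(self.history)
            # A perfectly constant history (sigma == 0) never flags in this simple version.
            flagged = sigma > 0 and abs(reading - mu) / sigma > self.z_threshold
        if not flagged:
            # Only normal readings update the baseline, so one spike does not distort it.
            self.history.append(reading)
        return flagged

def readings_to_send(readings, detector):
    """Filter a local stream so only anomalous readings consume bandwidth."""
    return [r for r in readings if detector.check(r)]

readings = [20.1, 20.3, 19.9, 20.0, 20.2, 20.1, 35.0, 20.0]
print(readings_to_send(readings, EdgeAnomalyFilter()))  # [35.0]
```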

For effective integration, you should standardize protocols and formats. Middleware and IoT platforms bring disparate feeds into a unified structure. Security must also stay central, with encryption and access controls to protect sensitive operational details.

Real-Time Data Quality Monitoring

Even the best systems fall short if real-time feeds are unreliable. Continuous monitoring checks for anomalies, missing values, or inconsistent patterns before they reach models, preventing errors downstream.

Automated checks may include:

- Schema validation to confirm formats

- Range checks for out-of-bound values

- Drift detection for pattern shifts over time
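The first two checks in the list, schema validation and range checks, can be sketched as a gate that quarantines bad events before they reach a model. The schema and bounds are hypothetical. Drift detection needs a baseline distribution to compare against, so it is left out of this sketch.

```python
# Hypothetical event schema and bounds; adjust to your own feeds.
SCHEMA = {"event_id": str, "amount": float, "currency": str}
RANGES = {"amount": (0.0, 1_000_000.0)}

def validate_event(event):
    """Return a list of problems; an empty list means the event may pass downstream."""
    problems = []
    for field, expected in SCHEMA.items():
        if field not in event:
            problems.append(f"missing field: {field}")
        elif not isinstance(event[field], expected):
            # Strict typing: an integer amount is rejected too; coerce upstream if that is too strict.
            problems.append(f"{field}: expected {expected.__name__}, got {type(event[field]).__name__}")
    for field, (lo, hi) in RANGES.items():
        value = event.get(field)
        if isinstance(value, (int, float)) and not lo <= value <= hi:
            problems.append(f"{field} out of range: {value}")
    return problems

def route(events):
    """Split events into those safe for the model and a quarantine list for alerting."""
    passed, quarantined = [], []
    for e in events:
        issues = validate_event(e)
        if issues:
            quarantined.append((e, issues))
        else:
            passed.append(e)
    return passed, quarantined

passed, quarantined = route([
    {"event_id": "e1", "amount": 42.5, "currency": "USD"},
    {"event_id": "e2", "amount": -3.0, "currency": "USD"},
    {"event_id": "e3", "currency": "EUR"},
    {"event_id": "e4", "amount": "12", "currency": "USD"},
])
for event, issues in quarantined:
    print(event["event_id"], issues)
```

The quarantine list is what feeds the dashboards and alerts described next, and keeping it also preserves evidence for the audit trail.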

Dashboards and alerts enable teams to act immediately instead of after flawed reports surface.

Embedding monitoring in pipelines builds trust in outputs and reduces manual clean-up later. It also supports compliance requirements by keeping a consistent audit trail.

5. Ethical and Compliance Considerations

Scaling AI responsibly means balancing opportunity with accountability. Protecting privacy, meeting regulations, and reducing bias ensure that your systems not only work but also earn trust from users and regulators.

Privacy, governance, and audit trails matter when you feed fundamental data to AI in regulated industries like finance, and a transparent process depends on traceability. Platforms such as Daloopa provide verified, auditable pipelines. Daloopa's fundamental financial data API includes hyperlinks back to sources, so every figure can be verified, which reduces audit risk and improves adoption.

Implementing Privacy-Preserving Data Integration

Privacy can’t be an afterthought when you’re merging siloed datasets. Sensitive fields, especially in finance or healthcare, should be de-identified before integration. Techniques such as anonymization, tokenization, or differential privacy lower re-identification risks.
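One way to tokenize identifiers before integration is keyed hashing (HMAC-SHA256). It replaces each sensitive value with a stable token, so records from different systems can still be joined without exposing the raw value. This is pseudonymization rather than anonymization: anyone with the key can recompute tokens, so the key must be stored separately and access controls still apply. The key handling and field list below are assumptions.

```python
import hashlib
import hmac

# Assumption: in practice this key comes from a secrets manager, never from source code.
SECRET_KEY = b"replace-with-a-key-from-your-secrets-manager"
SENSITIVE_FIELDS = ("email", "ssn")

def pseudonymize(value: str, key: bytes = SECRET_KEY) -> str:
    """Deterministic keyed token: the same normalized input always yields the same token."""
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(key, normalized, hashlib.sha256).hexdigest()

def deidentify(record: dict) -> dict:
    """Replace sensitive fields with tokens before the record enters the shared store."""
    return {
        k: pseudonymize(v) if k in SENSITIVE_FIELDS and v is not None else v
        for k, v in record.items()
    }

crm_row = deidentify({"email": "Ana@Example.com", "ssn": "123-45-6789", "plan": "pro"})
billing_row = deidentify({"email": "ana@example.com ", "plan": "basic"})
print(crm_row["email"] == billing_row["email"])  # True: the two sources still join on the token
```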

Following standards like GDPR and HIPAA provides structure. GDPR requires a lawful basis for processing (consent is one option) and gives individuals the right to request erasure of their data. HIPAA requires safeguards for protected health information, including audit controls that record access.

Practical safeguards include:

- Encryption both in transit and at rest

- Role-based access for limiting visibility

- Routine audits to validate policies

Embedding these steps early does more than “check a box”. It reassures customers their information is safe, reducing churn risk and protecting brand reputation while keeping regulators satisfied.

Ensuring Regulatory Compliance in AI Data Flows

AI systems that influence healthcare, finance, or consumer decisions often face regulatory oversight. FDA guidance on medical device software and the EU AI Act set expectations for data handling throughout the lifecycle.

You should integrate compliance into workflows at ingestion, processing, and training. That means tracking lineage, keeping audit logs, and verifying jurisdictional requirements at every stage.

A sample plan might look like:

| Step | Action | Example Standard |
|---|---|---|
| Data collection | Confirm consent & purpose | GDPR lawful basis |
| Data storage | Apply encryption & retention limits | HIPAA safeguards |
| Model training | Record dataset versions | FDA SaMD guidance |

Embedding compliance this way prevents costly project rework, avoids fines, and, most importantly, keeps AI initiatives moving instead of stalled in legal review.
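For the "record dataset versions" step, one lightweight approach is to store a content fingerprint of the training data alongside each model. An auditor can then confirm exactly which inputs were used. The sketch hashes a canonical JSON encoding, so row order and key order do not change the result. The metadata fields and names are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone

def dataset_fingerprint(records):
    """SHA-256 over canonical JSON rows, independent of row order and key order."""
    canonical = sorted(json.dumps(r, sort_keys=True, separators=(",", ":")) for r in records)
    digest = hashlib.sha256()
    for line in canonical:
        digest.update(line.encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()

def training_record(model_name, records, source_note):
    """Metadata to store with a trained model so its exact inputs can be traced later."""
    return {
        "model": model_name,
        "dataset_sha256": dataset_fingerprint(records),
        "row_count": len(records),
        "source": source_note,
        "recorded_at": datetime.now(timezone.utc).isoformat(),
    }

rows = [{"customer_id": "C1", "churned": False}, {"customer_id": "C2", "churned": True}]
meta = training_record("churn-v3", rows, "warehouse.customers")
print(meta["row_count"], meta["dataset_sha256"][:12])
```

If a regulator later asks what a model was trained on, re-hashing the archived dataset and comparing fingerprints answers the question without relying on file names.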

Addressing Bias and Fairness in AI Training Data

Bias in AI threatens both outcomes and compliance. Overrepresented or missing groups in training data can produce skewed results, damaging reputation and drawing regulatory action.

A good practice is to conduct bias audits early, checking for demographic or behavioral imbalances. Tools like risk-of-bias assessment frameworks highlight concerns before models launch. Mitigation may involve balancing datasets, using fairness-aware algorithms, or creating separate models for different groups.
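A first-pass bias audit can be as simple as comparing each group's share of the training data with a reference share, for example the customer base the model will serve. The sketch below flags groups whose share falls below half of their reference. The tolerance, group labels, and reference shares are all assumptions. A full audit would also compare outcomes and error rates across groups.

```python
from collections import Counter

def representation_gaps(groups, reference_shares, tolerance=0.5):
    """Flag groups whose training-data share is below `tolerance` x their reference share.

    `groups` holds the group label of each training row; `reference_shares` maps each
    group to its expected share (roughly summing to 1). Returns {group: observed_share}.
    """
    n = len(groups)
    counts = Counter(groups)
    flagged = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / n if n else 0.0
        if observed < tolerance * expected:
            flagged[group] = round(observed, 3)
    return flagged

training_regions = ["north"] * 70 + ["south"] * 25 + ["west"] * 5
customer_base = {"north": 0.5, "south": 0.3, "west": 0.2}
print(representation_gaps(training_regions, customer_base))  # {'west': 0.05}
```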

Bias also has organizational roots. Involving diverse stakeholders when setting objectives and validating results helps prevent assumptions from going unchecked.

Embedding fairness reviews into pipelines improves trust while producing stronger long-term performance.

6. Operationalizing Your AI Data Integration

AI data integration only delivers value when systems are adopted, measured, and scaled across the enterprise. In other words, the board doesn’t care about a pilot; it wants measurable impact across revenue, churn, and efficiency.

By adopting modular systems, you also ensure future tools can connect directly through a fundamental financial data API, reducing redundancy and accelerating adoption.

Creating Cross-Functional Collaboration Models

Integration isn’t just technical; it requires cooperation across operations, finance, sales, and IT. Without collaboration, teams risk building pipelines that don’t address real needs.

One solution is creating data councils or integration task forces. These groups set priorities, approve standards, and resolve conflicts. Clear ownership prevents duplication and maintains accountability.

Shared tools like centralized dashboards or project boards make coordination easier, reducing delays and ensuring everyone stays aligned.

Measuring and Optimizing Integration Performance

After going live, you have to track performance. Technical uptime alone doesn’t reflect impact; metrics must tie back to data quality for AI systems and business use.

A framework could track:

| Metric Type | Example Measures | Purpose |
|---|---|---|
| Data quality for AI systems | Completeness, duplication rate | Ensure AI models receive reliable inputs |
| Timeliness | Latency, refresh frequency | Confirm data supports real-time or near-real-time needs |
| Adoption | User access rates, model usage | Show whether teams actually leverage integrated data |

Regular reviews highlight areas for improvement, such as refining transformation steps or automating checks. Small changes can improve efficiency while building confidence in outputs.
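For the timeliness row, a simple recurring check compares each source's last successful refresh against a freshness target and reports the sources that have fallen behind. The source names and targets below are hypothetical placeholders for whatever your service levels specify.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical freshness targets per source.
MAX_AGE = {
    "web_events": timedelta(minutes=5),
    "crm": timedelta(hours=1),
    "erp": timedelta(days=1),
}

def stale_sources(last_refresh, now):
    """Return {source: age} for sources older than their target; None means never refreshed."""
    stale = {}
    for source, limit in MAX_AGE.items():
        ts = last_refresh.get(source)
        if ts is None:
            stale[source] = None
        elif now - ts > limit:
            stale[source] = now - ts
    return stale

now = datetime(2024, 6, 3, 12, 0, tzinfo=timezone.utc)
last_refresh = {
    "web_events": now - timedelta(minutes=2),
    "crm": now - timedelta(hours=3),
}
print(stale_sources(last_refresh, now))
```

Run on a schedule and charted over time, the output doubles as the "refresh frequency" measure in the table.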

Scaling Your Data Integration for Enterprise AI

As demand grows, integration must shift from isolated projects into company-wide systems. That means supporting larger volumes, multiple sources, and stronger governance.

One practical method is modular architecture. Building reusable connectors for CRM, ERP, and analytics platforms cuts future costs and avoids duplication. Standardized APIs also simplify adding new tools down the line.

Scalability also depends on clear governance. Policies for access, privacy, and compliance become more critical as more teams rely on integrated data. Combining automation with oversight creates a framework that balances innovation with control.

Turn Your Data Chaos into AI Confidence

AI doesn’t fail because of weak algorithms. It fails when the data feeding it is scattered, inconsistent, or unreliable. Scaling AI means fixing that foundation first. Once data is trusted, AI shifts from fragile pilots to enterprise infrastructure. Forecasts sharpen, risk models hold up, and operations run in sync. Leadership stops asking for ROI and starts seeing it.

The difference comes from continuously feeding fundamental data to AI pipelines with integrity and data quality built in from the start.

Daloopa gives you that strong foundation with automated data integration and an advanced fundamental financial data API, so you can feed fundamental data to AI reliably, at scale, and with confidence. Contact us to see how we can help your team move beyond pilots and unlock real ROI from AI.