You know the frustration of having critical insights buried in unstructured filings, decks, and transcripts while stakeholders demand answers right now. Every hour you spend wrangling spreadsheets costs you credibility, opportunities, and decision speed. Modern LLM-powered extraction and query layers are designed to end this cycle. They surface insights automatically and stitch them, cell by cell, into your working models and dashboards with full source lineage, so you can act instead of babysitting data. Financial institutions are rapidly adopting AI and LLMs to transform data analytics, and early adopters report significant efficiency gains in reporting cycles.

Key Takeaways

- LLM-powered systems automate data cleaning and turn unstructured financial inputs into structured insights from plain-language instructions.

- These tools relieve reporting bottlenecks by returning analysis quickly while preserving accuracy, lineage, and evidence for compliance.

- Financial teams gain conversational access to complex analytics without specialized coding skills or long implementation windows, delivering answers in minutes instead of days and restoring hours to high-value analysis.

The Evolution of Financial Data Analytics

Financial analysis used to be a marathon of copy-paste, reformat, reconcile, and re-run. Today, the shift is not just faster tools—it’s a change in how you interact with the data: from manual preprocessing to conversational, evidence-linked analysis that respects governance and audit trails. This evolution addresses what matters most to finance professionals: time saved on data preparation, accuracy gained through automated validation, and compliance risk reduced via complete audit trails.

Traditional Approaches to Financial Data Analysis

You’ve spent long nights rebuilding a table after a PDF caused the numbers to shift columns, or convincing a manager that a formula error, not the company, caused a variance. Remember the quarterly close when a single misaligned cell cascaded into three days of reconciliation work, delaying your team’s investor presentation and forcing last-minute apologies to the board? Manual data processing dominated: extract text or tables, normalize units, align footnotes, and rebuild cells by hand. Statistical models helped, but only after you fixed the inputs.

That gap between raw source material and model-ready input created three recurring problems:

- Time drains and bottlenecks at close and earnings windows that keep you at the office when everyone else has gone home.

- Human error in formulas and manual transfers that create anxiety around every board deck and external report.

- Limited ability to fold narrative signals (guidance language, management tone) into quantitative forecasts, leaving competitive insights on the table.

You see those constraints when datasets scale, when disclosures arrive in varied formats, or when a quick “what changed?” is needed from yesterday’s filing.

The Rise of LLMs in Financial Services

LLMs change the front end of your workflow. Instead of forcing you to learn query syntax or wait for data engineers, you ask plain-English questions, get structured outputs, and trace every number back to its source. Automated extractors convert PDFs, XBRL, and slide decks into cell-level data that plug straight into models; conversational layers let you interrogate those facts and generate audit-ready commentary.

For instance, Daloopa’s MCP integration, built on the open Model Context Protocol, lets analysts query financial data in natural language directly within their workflow tools, eliminating context-switching and data transfer delays.

Domain adaptations (models trained on financial corpora) improve accuracy on domain-specific language such as “non-GAAP adjustments” and “operating lease right-of-use assets,” while multimodal reasoning links charts, tables, and narrative so the answer is more than a number: it’s a sourced explanation. This matters when compliance teams need to verify every figure, when auditors demand source documentation, or when regulators question your methodology under FINRA or SEC oversight.

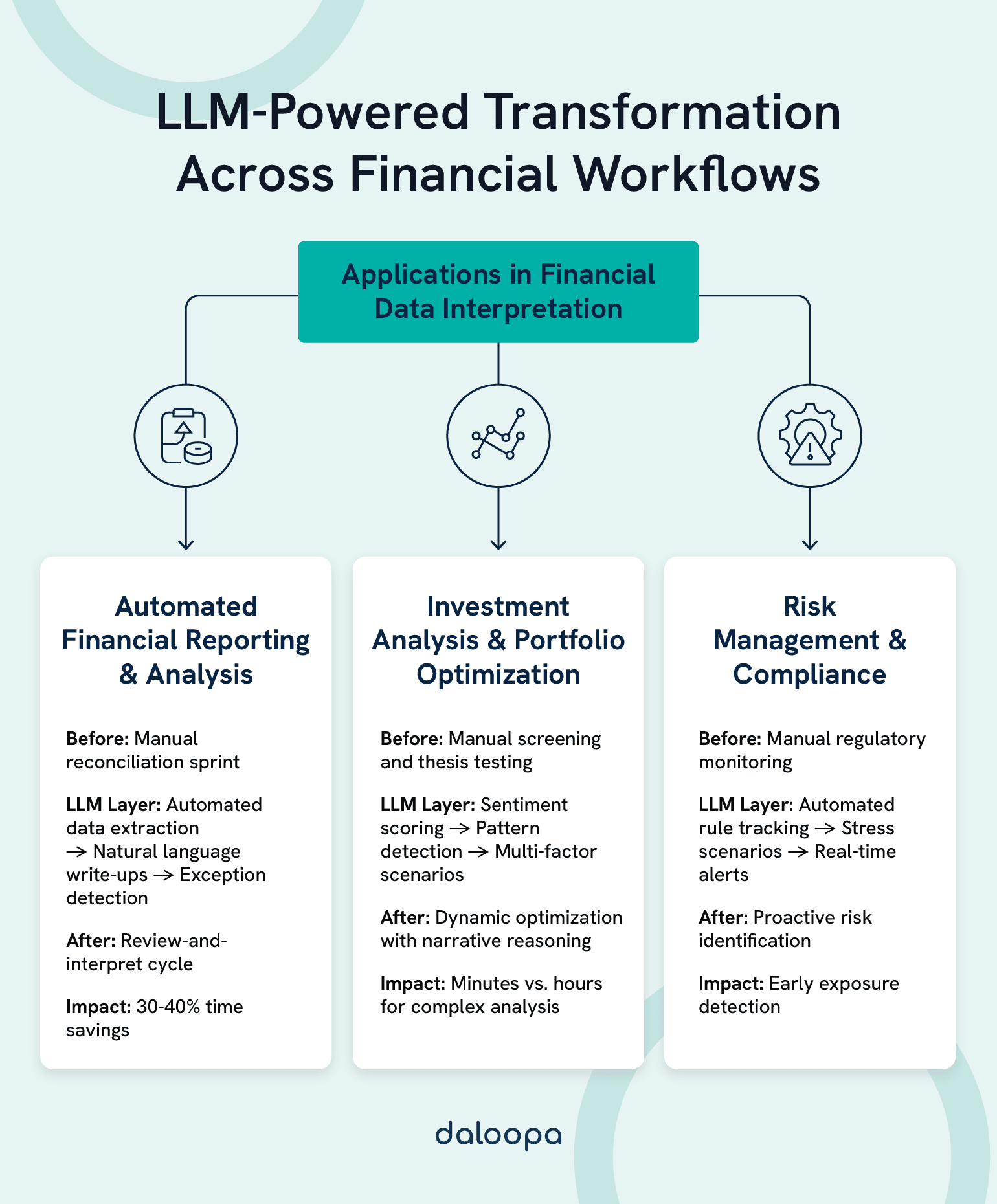

Transformative Applications in Financial Workflows

LLMs reshape how you run reporting, investment research, and risk programs, and each area benefits from both time savings and improved defensibility. More importantly, they change how you spend your day: less time on data plumbing, more time on the strategic insights that advance your career and drive business decisions.

Automated Financial Reporting and Analysis

Imagine this: it’s the end of Q3. Instead of the usual three-day sprint to compile variance reports from 15 different sources, you arrive Monday morning to find preliminary reports already drafted, complete with commentary explaining the 12% revenue increase, the drivers of margin compression, and flagged anomalies in SG&A. Your role shifts from data compilation to strategic interpretation, and you deliver insights to leadership two days early. This is the shift LLM automation is designed to bring to monthly and quarterly cycles.

Systems pull from many sources, align formats, and draft standardized reports with consistent commentary.

Key automation capabilities include:

- Automated extraction from financial statements and notes with cell-level lineage to source documents

- Natural language write-ups of drivers and performance that maintain your firm’s house style and terminology

- Exception detection for unusual market or ledger movements with confidence scores and suggested investigation paths

- Interactive dashboards that accept conversational prompts like “Show me gross margin trends by segment with peer comparisons”
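As a rough illustration of the exception-detection bullet, the sketch below flags ledger lines whose latest value sits far outside their own history. A plain z-score test is an assumption standing in for whatever detector a given platform uses; the function name, the 3.0 threshold, and the sample figures are hypothetical. The z-score itself doubles as a simple severity score for ranking flags.

```python
from statistics import mean, stdev


def flag_exceptions(history: dict[str, list[float]],
                    latest: dict[str, float],
                    z_threshold: float = 3.0) -> dict[str, float]:
    """Return {ledger_line: z_score} for lines whose latest value is unusual.

    A plain z-score test stands in for a production anomaly detector.
    """
    flags: dict[str, float] = {}
    for line, values in history.items():
        if line not in latest or len(values) < 2:
            continue  # need a current value and at least two historical points
        sigma = stdev(values)
        if sigma == 0:
            continue  # flat history: z-score is undefined
        z = (latest[line] - mean(values)) / sigma
        if abs(z) >= z_threshold:
            flags[line] = round(z, 2)
    return flags


# Hypothetical monthly figures (in $ millions)
history = {"SG&A": [100, 102, 98, 101, 99], "R&D": [50, 51, 49, 50, 50]}
print(flag_exceptions(history, {"SG&A": 130, "R&D": 50.5}))  # {'SG&A': 18.97}
```

A real system would add seasonality adjustments and calibrate thresholds per account; the point here is only that each flag carries a number an analyst can sort and question.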

You can move from a manual reconciliation sprint to a review-and-interpret cycle: systems populate the numbers and draft the narrative; you verify edge cases and finalize the story. Industry studies of finance automation commonly report 30–40% time savings in the automated process steps, letting teams reallocate effort to analysis and strategy. Solutions like Daloopa’s LLM-powered data platform exemplify this shift, automatically extracting and structuring financial data from filings and enabling analysts to focus on interpretation rather than data wrangling.

Investment Analysis and Portfolio Optimization

LLM-powered analytics change how you screen names, test theses, and optimize allocations. Consider the analyst who needs to evaluate 50 mid-cap healthcare companies against new reimbursement policy changes announced yesterday. Previously, this meant days of reading transcripts and filings. With LLM assistance, she queries “Which companies mentioned Medicare reimbursement concerns in recent calls?” and receives ranked results with sentiment scores and direct quotes—in under five minutes. Systems synthesize market data, narrative tone, and historical behavior to sharpen decisions.

Advanced analysis features:

- Sentiment scoring across earnings calls and curated news with temporal tracking to identify inflection points

- Pattern detection in factors, flows, and sector rotations using both quantitative metrics and narrative signals

- Automated screening of prospects against custom criteria with natural language definitions like “sustainable dividend yield above 4% with management explicitly addressing payout ratio concerns”

- Risk-adjusted views with narrative explanations of trade-offs that support investment committee discussions with clear, defensible reasoning
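To make the screening bullet concrete, here is a minimal sketch that splits a natural-language criterion into a numeric filter and a narrative check. The `Company` record, the 80% payout cap used as a proxy for “sustainable,” and the keyword match standing in for an LLM classifier are all assumptions for illustration, not any vendor’s API.

```python
from dataclasses import dataclass


@dataclass
class Company:
    ticker: str
    dividend_yield: float  # decimal, e.g. 0.045 = 4.5%
    payout_ratio: float    # dividends / earnings
    transcript: str        # latest earnings-call text


def mentions_payout_ratio(text: str) -> bool:
    """Keyword stand-in for an LLM judgment such as
    'does management explicitly address payout-ratio concerns?'"""
    lowered = text.lower()
    return "payout ratio" in lowered or "payout-ratio" in lowered


def screen(universe: list[Company],
           min_yield: float = 0.04,
           max_payout: float = 0.8) -> list[str]:
    """Numeric screen plus narrative check; max_payout is an assumed
    proxy for a 'sustainable' dividend."""
    return [
        c.ticker
        for c in universe
        if c.dividend_yield > min_yield
        and c.payout_ratio <= max_payout
        and mentions_payout_ratio(c.transcript)
    ]
```

In practice `mentions_payout_ratio` is the piece an LLM would replace, since management rarely uses the exact phrase; keeping the numeric filter deterministic makes that half of the screen easy to audit.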

You can pose multi-factor scenarios and receive clear, defensible output in minutes. The system weighs volatility, peer comps, and macro signals so you see both opportunity and exposure. This speed advantage matters when markets move fast and first-mover insights drive returns.

With continuous monitoring, optimization becomes dynamic. LLMs evaluate how new data affects your holdings and propose rebalancing moves, along with the reasoning, thresholds, and alternatives to consider.

Systematic strategies benefit when narrative inputs inform signals. Models incorporate event language and guidance shifts alongside quantitative screens for more measured entries and exits. For deeper guidance on selecting and applying LLMs effectively in financial analysis workflows, see Daloopa’s financial analyst guide to choosing the right LLM for data analysis.

Risk Management and Compliance

The risk manager’s nightmare: a new Basel III amendment drops on Friday afternoon, and the executive committee needs an impact assessment by Tuesday. Traditional approaches meant weekend work combing through the 200-page document and manually mapping requirements to existing controls. LLM-assisted systems now parse the regulation, identify affected processes, highlight gaps in current procedures, and draft the impact summary, giving the manager a working document by Saturday morning and a real weekend.

Risk programs widen their lens with LLMs reading regulations, disclosures, and internal activity at scale. Systems surface potential exposures and policy gaps earlier in the process.

Risk management applications:

- Automated monitoring against changing rules and standards including SEC disclosure updates, FINRA requirements, and emerging ESG reporting frameworks

- Stress scenarios with clear narrative rationales and assumptions that translate technical risk metrics into business language for executive review

- Real-time alerts enriched with context and likely downstream effects so teams can prioritize responses and allocate resources effectively

- Assistance drafting regulatory submissions with accuracy checks and citation verification to reduce amendment cycles with regulators

Models process regulatory updates and map them to your controls, highlighting what needs revision. You see exactly which procedures, fields, or teams a change touches. This capability becomes especially valuable as regulatory complexity increases—from SEC climate disclosure proposals to enhanced Dodd-Frank reporting requirements.

Forecasting improves when diverse inputs—economic prints, sentiment, geopolitics—are weighed together. You receive risk briefs that combine traditional metrics with narrative signals in one view.

Assistants handle routine policy questions and document lookups, freeing specialists for deeper investigations. Every interaction is logged for later examination and training. This audit trail supports compliance requirements while building institutional knowledge that improves with use.

Core Capabilities of LLM-Powered Financial Analytics

LLM-powered systems combine NLP, pattern detection, and conversational interfaces to let you work in plain language while preserving the rigor required for high-stakes finance. These capabilities work together to deliver what every financial professional needs: faster answers, provable accuracy, and reduced compliance exposure.

Natural Language Processing for Financial Documents

You handle filings, management commentary, and analyst notes that hide key drivers inside legalese and long-winded disclosures. You know the feeling: searching for “debt covenants” across 50 pages of a credit agreement, only to find the critical ratio buried in a footnote on page 38 under non-standard terminology.

Modern NLP pipelines:

- Recognize entities and numeric fields inside SEC filings and notes automatically, using entity recognition trained on financial taxonomies.

- Map those extracts into your taxonomies and spreadsheet templates while preserving formula relationships and update logic.

- Produce sourced Q&A so you can ask, “Where did diluted EPS change versus guidance?” and get a cell-level, cited answer complete with the exact paragraph, page number, and filing date.

That reduces tedious copy-and-paste and gives you reproducible evidence for every figure, which is critical when audit or compliance teams ask for lineage. Practical platforms already integrate continuous monitoring to push new filings into your sheets shortly after they’re published. Daloopa’s API exemplifies this approach, providing programmatic access to continuously updated financial data with full source attribution.
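One way to picture cell-level lineage is a record that keeps every extracted value attached to the filing, page, and excerpt it came from. The sketch below assumes hypothetical `SourceRef` and `ExtractedCell` types (they are not Daloopa’s schema) and adds a cheap sanity check that the number actually appears in the cited text.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class SourceRef:
    filing_type: str  # e.g. "10-Q"
    filed: date
    page: int
    excerpt: str      # the sentence or table row the value came from


@dataclass(frozen=True)
class ExtractedCell:
    metric: str       # e.g. "Diluted EPS"
    period: str       # e.g. "Q3 FY2024"
    value: float
    source: SourceRef

    def citation(self) -> str:
        """Human-readable pointer from the model cell back to its source."""
        s = self.source
        return (f"{self.metric} {self.period}: {self.value} "
                f"[{s.filing_type}, filed {s.filed.isoformat()}, p. {s.page}]")

    def value_in_excerpt(self) -> bool:
        """Cheap lineage check: the number should appear in the cited text
        (thousands separators are ignored)."""
        v = float(self.value)
        text = str(int(v)) if v.is_integer() else repr(v)
        return text in self.source.excerpt.replace(",", "")
```

A failed `value_in_excerpt` check does not prove the extraction is wrong (the filing may state the figure in different units), but it is a fast way to route a cell to human review.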

Advanced Pattern Recognition and Anomaly Detection

Beyond extraction, LLMs combined with analytics flag unusual trends and provenance issues:

- Anomaly detectors highlight disclosure mismatches or ledger movements that don’t match narrative explanations—like when management describes “steady demand” but days sales outstanding jumped 20% quarter-over-quarter.

- Knowledge graphs reveal supply-chain or subsidiary links that could affect revenue recognition or counterparty credit—surfacing relationships that traditional financial statement analysis misses.

- Predictive components weigh narrative shifts (guidance tone) with numeric trends to surface early signals of stress or opportunity—detecting when “cautiously optimistic” language appears for the first time in three years.
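The first and third bullets can be approximated with simple rules, shown here as a sketch. Days sales outstanding is computed as accounts receivable divided by revenue, times the days in the period; the check flags a quarter-over-quarter DSO rise above 20% while commentary still says “steady demand,” and a second helper detects a phrase appearing for the first time in twelve quarters. The exact-phrase matching is an assumption standing in for an LLM’s reading of tone, and the dictionary keys are hypothetical.

```python
def dso(receivables: float, revenue: float, days: int = 91) -> float:
    """Days sales outstanding for one period: AR / revenue * days in period."""
    return receivables / revenue * days


def narrative_mismatch(prev: dict, curr: dict, jump: float = 0.20) -> bool:
    """Flag a QoQ DSO increase above `jump` while commentary claims steady demand."""
    prev_dso = dso(prev["receivables"], prev["revenue"])
    curr_dso = dso(curr["receivables"], curr["revenue"])
    dso_rose = (curr_dso - prev_dso) / prev_dso > jump
    # Exact phrase match stands in for an LLM judgment of management tone.
    return dso_rose and "steady demand" in curr["commentary"].lower()


def first_appearance(phrase: str, transcripts: list[str], lookback: int = 12) -> bool:
    """True if `phrase` occurs in the newest transcript (last in the list)
    but in none of the previous `lookback` transcripts (12 quarters = 3 years)."""
    *history, latest = transcripts
    window = history[max(0, len(history) - lookback):]
    needle = phrase.lower()
    return needle in latest.lower() and not any(needle in t.lower() for t in window)
```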

These systems don’t replace your judgment; they surface candidates for focused review, cutting the time you spend hunting for the needle in the haystack. Instead of anxiety about what you might have missed in a 300-page 10-K, you gain confidence that the system flagged the three sections demanding your expertise.

Conversational Financial Intelligence

You can work with data by conversation instead of rigid query builders or static dashboards. This interaction style shortens time to insight and improves adoption across the team. More importantly, it eliminates the knowledge barrier that kept junior analysts from accessing complex analytics—now anyone can ask sophisticated questions in plain English.

Natural language queries handle questions like “What drove the Q3 revenue decline?” and return structured explanations with drillable evidence. The system infers intent and surfaces relevant fields fast. During an executive briefing, you can answer unexpected follow-up questions on the spot instead of saying “I’ll get back to you.”

Data visualization can be generated on the fly. Describe what you need—a cohort comparison, a variance bridge, a rolling average—and the chart appears with the right grain and range. No more waiting on BI teams or wrestling with pivot table configurations.
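A variance bridge is a good example of what such a request computes behind the chart. The sketch below assumes revenue is simply price times volume for a single product and uses one common convention: the volume effect is valued at the prior price and the price effect at the current volume, so the two effects sum exactly to the total change. Mix effects and multi-segment bridges are left out.

```python
def price_volume_bridge(p0: float, v0: float, p1: float, v1: float) -> dict[str, float]:
    """Split a revenue change into volume and price effects.

    prior + volume_effect + price_effect == current, because
    p0*v0 + (v1 - v0)*p0 + (p1 - p0)*v1 == p1*v1.
    """
    return {
        "prior_revenue": p0 * v0,
        "volume_effect": (v1 - v0) * p0,  # change in units, at the old price
        "price_effect": (p1 - p0) * v1,   # change in price, on the new units
        "current_revenue": p1 * v1,
    }


# Hypothetical: price rises from 10 to 11 while units fall from 100 to 90
print(price_volume_bridge(10.0, 100.0, 11.0, 90.0))
```

With these inputs the bridge runs 1,000 → −100 (volume) → +90 (price) → 990, which is exactly the set of bars a generated waterfall chart would show.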

The conversational layer records prompts and responses for a traceable audit trail. That transparency supports review, learning, and compliance without extra documentation work. Regulators and auditors can see exactly what questions were asked, what data informed answers, and who made which interpretations.
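A minimal sketch of such an audit trail follows. It records who asked what, what the system answered, and which sources it cited. The hash chaining (each entry stores the hash of the previous one, so a later edit to an old record is detectable) is a design choice added for illustration, not something the platforms above are documented to do; class and field names are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(body: dict) -> str:
    """Stable SHA-256 over a JSON encoding with sorted keys."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    """Append-only log of prompts and responses with a simple hash chain."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, user: str, prompt: str, response: str, sources: list[str]) -> dict:
        entry = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "prompt": prompt,
            "response": response,
            "sources": sources,
            "prev_hash": self.entries[-1]["hash"] if self.entries else "",
        }
        entry["hash"] = _digest(entry)  # hash covers every field above
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev_hash = ""
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True

    def to_jsonl(self) -> str:
        """One JSON object per line, convenient for archiving or export."""
        return "\n".join(json.dumps(e, sort_keys=True) for e in self.entries)
```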

Real-time analysis continuously ingests updates, revising narratives as disclosures, prints, or macro data arrive. You get proactive nudges when developments impact your coverage list or models. No more anxiety about whether you saw the amended filing or the updated guidance buried in an 8-K.

For technical implementation details on conversational financial data access, Daloopa’s MCP documentation provides guidance on integrating natural language query capabilities into existing workflows.

Implementation Strategies and Considerations

Deploying these tools successfully is as much about governance and change management as it is about models. The teams that succeed don’t just install software—they rethink workflows, train users, and build trust gradually through proof points.

Integration with Existing Financial Systems

You should phase deployments: start with extraction and commentary support, then extend automation into close workflows and forecasting. Begin with low-risk, high-frustration tasks—like extracting footnote disclosures or summarizing earnings call transcripts—where errors are easy to catch and time savings are immediately visible. Build credibility before automating mission-critical close processes.

Integration priorities:

- Pipelines that normalize unstructured inputs into your taxonomies, ensuring GAAP vs. non-GAAP classifications match your firm’s standards.

- APIs connecting extraction services to warehouses and Excel templates, using webhooks to trigger model updates when new filings arrive rather than relying on manual refreshes.

- Evidence-tracking so every automated cell links back to source text or XBRL, satisfying auditor requirements for data lineage without additional documentation burden.
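The webhook pattern in the second bullet might look like the following sketch. The payload fields (`ticker`, `form`, `accession`) and the mapping from tickers to model workbooks are assumptions, not a documented vendor schema. The dispatcher drops repeat deliveries of the same filing, because webhook senders commonly retry and a refresh should not run twice for one filing.

```python
import json


class FilingDispatcher:
    """Turns hypothetical 'new filing' webhook payloads into model-refresh jobs."""

    def __init__(self, models_by_ticker: dict[str, list[str]]) -> None:
        self.models_by_ticker = models_by_ticker
        self.seen: set[str] = set()  # accession numbers already handled

    def handle(self, raw_body: str) -> list[tuple[str, str]]:
        """Return (model, accession) refresh jobs for one webhook delivery."""
        event = json.loads(raw_body)
        accession = event["accession"]
        if accession in self.seen:
            return []  # duplicate delivery of a filing we already queued
        self.seen.add(accession)
        models = self.models_by_ticker.get(event["ticker"], [])
        return [(model, accession) for model in models]
```

In a deployment, `handle` would sit behind an HTTP endpoint and the returned jobs would go to a queue; the deduplication set would live in durable storage rather than memory.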

Hybrid architectures—keeping rule-based validations active while layering LLM outputs on top—let you preserve controls and gradually shift trust as the system proves itself.
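As a sketch of the hybrid approach, the function below runs deterministic accounting checks on LLM-extracted balance-sheet values before they are allowed into a model. The field names and the 0.5 rounding tolerance (suitable for figures reported in millions) are assumptions; the point is that the rule layer is plain, auditable code that does not depend on the model.

```python
def validate_balance_sheet(cells: dict[str, float], tol: float = 0.5) -> list[str]:
    """Rule-based checks on extracted values; an empty list means they pass."""
    required = ("total_assets", "total_liabilities", "total_equity")
    missing = [k for k in required if k not in cells]
    if missing:
        return [f"missing field: {k}" for k in missing]

    failures: list[str] = []
    # Accounting identity: assets = liabilities + equity, within rounding tolerance
    gap = cells["total_assets"] - (cells["total_liabilities"] + cells["total_equity"])
    if abs(gap) > tol:
        failures.append(f"assets != liabilities + equity (gap {gap:g})")

    # Subtotal check, applied only when both components were extracted
    if "current_assets" in cells and "noncurrent_assets" in cells:
        parts = cells["current_assets"] + cells["noncurrent_assets"]
        if abs(parts - cells["total_assets"]) > tol:
            failures.append("asset subtotals do not sum to total assets")
    return failures
```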

Addressing Challenges and Limitations

No model is perfect. The difference between implementations that deliver value and those that become expensive distractions lies in honest acknowledgment of limitations and robust guardrails.

Common guardrails you should implement:

- Human-in-the-loop review for anything that affects balance sheets or disclosures: any automated output that flows into SEC filings, board materials, or investor communications must have named reviewer sign-off.

- Multi-model reconciliation to detect model drift or “hallucination” cases, e.g., running the same extraction through two different LLM providers and flagging discrepancies for manual review.

- Confidence scores and flags for low-certainty outputs, surfacing uncertainty transparently so analysts know when to verify and when to trust.

- Routine bias and fairness audits (important in credit and fraud contexts), especially as fair lending regulations and algorithmic accountability frameworks evolve.
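The reconciliation and confidence-score guardrails can be combined into one review queue. In this sketch each extraction run maps a field to a `(value, confidence)` pair; that format, the 0.1% relative tolerance, and the 0.9 confidence floor are hypothetical choices. Any field on which the two models disagree, that only one model found, or that either model reports with low confidence is routed to a named reviewer.

```python
import math


def reconcile(run_a: dict[str, tuple[float, float]],
              run_b: dict[str, tuple[float, float]],
              rel_tol: float = 0.001,
              min_conf: float = 0.9) -> dict[str, str]:
    """Compare two independent extraction runs (e.g., from two LLM providers).

    Each run maps field -> (value, confidence in [0, 1]). Returns
    field -> reason for every field that should go to manual review.
    """
    review: dict[str, str] = {}
    for field in sorted(run_a.keys() | run_b.keys()):
        if field not in run_a or field not in run_b:
            review[field] = "extracted by only one model"
            continue
        (value_a, conf_a), (value_b, conf_b) = run_a[field], run_b[field]
        if not math.isclose(value_a, value_b, rel_tol=rel_tol):
            review[field] = f"models disagree: {value_a} vs {value_b}"
        elif min(conf_a, conf_b) < min_conf:
            review[field] = "low confidence"
    return review
```

Fields absent from the result passed both checks; they still flow through the human sign-off step whenever they feed filings or board materials.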

Security deserves equal emphasis: protect data with private-cloud or on-prem deployments where necessary and align processing with sector regulations and internal data classification. Many financial institutions require that material non-public information (MNPI) never leaves controlled environments—architect your LLM integrations accordingly with on-premise or dedicated-tenant deployments.

Organizations that see strong ROI often establish a cross-functional governance committee (finance, IT, compliance, risk) that reviews use cases, sets risk tolerances, and approves rollout stages. This prevents both over-caution that delays value and recklessness that creates compliance incidents.

Future Directions and Emerging Trends

The technology trajectory points toward even tighter integration between financial workflows and AI capabilities, reducing latency between “question asked” and “decision made” from hours to seconds.

Multimodal Financial Analytics

Systems will increasingly read charts, tables, and text together: you’ll ask about a revenue bridge and get an explanation that links the graphic, the underlying table, and the filing footnotes. That reduces back-and-forth and gives you explanations grounded in source material. Imagine asking “Why did gross margin compress?” and receiving a response that highlights the relevant chart from the earnings deck, the supporting table from the 10-Q, and the management explanation from the transcript—all in one unified answer with citations.

Leading financial institutions are piloting multimodal systems that can analyze non-textual information like charts in investor presentations, signatures on contracts, and even video segments from analyst days to extract sentiment and commitments. This expands the analytical surface area beyond what traditional text-only NLP could address.

Specialized Financial LLMs and Domain Adaptation

Purpose-built and finance-tuned pre-trained models provide higher domain fluency (better sentiment analysis, entity recognition, and disclosure parsing), especially when fine-tuned on proprietary corpora. Generic LLMs may confuse “EBITDA” with “adjusted EBITDA” or misclassify operating vs. financing cash flows. Domain-specialized models trained on millions of financial documents understand these nuances and maintain accuracy on technical language.

Pairing those models with your own data (fine-tuning or retrieval-augmented pipelines) improves accuracy while keeping outputs interpretable and aligned to your KPIs. Organizations building proprietary fine-tuned models on their historical analyses, deal memos, and investment theses create a compounding knowledge advantage: the system learns your firm’s analytical approach and improves each time it is retrained or its retrieval index is refreshed with new work.

Expect continued specialization: credit-focused LLMs optimized for covenant analysis, equity research models trained on thesis-building patterns, and treasury-specific systems fluent in hedging instruments and liquidity management. This specialization delivers the accuracy financial workflows demand.

Transform Your Financial Data Interpretation With Daloopa

Cut the spreadsheet treadmill. Picture your next earnings close: instead of three days extracting data and two more reconciling discrepancies, you connect a single filing to your model, ask a plain-English question like “Has the effective tax rate guidance changed and by how much?”, and watch the cells, commentary, and evidence links populate your workbook in seconds. The filing that used to consume your Tuesday and Wednesday now takes 20 minutes to validate and interpret on Monday afternoon. You spend Tuesday and Wednesday testing the thesis everyone’s been asking for—the strategic analysis that advances deals, informs investment decisions, and elevates your role from data processor to insight driver.

Daloopa’s continuous extraction and natural-language query capabilities deliver:

- Time reclaimed: Analysts report recovering 10–15 hours per week previously spent on manual data tasks

- Accuracy improved: Cell-level source attribution eliminates “where did this number come from?” investigations

- Compliance simplified: Complete audit trails from source document to model cell support regulatory and audit requirements without extra documentation work

Explore how Daloopa’s LLM integration can turn your next earnings sprint into an analysis sprint. Connect with financial data through natural language queries via MCP, or integrate continuously updated data through the API.

The question isn’t whether LLM-powered analytics will transform financial workflows—it’s whether you’ll lead that transformation at your organization or watch competitors pull ahead while you’re still manually reformatting PDFs.